First of all, I have a problem with deleted partitions: one of them is a member of a RAID 0 array (the first device of the array), and the array can no longer be assembled or mounted. I've read several threads on this forum and tried TestDisk's Quick Search and Deeper Search several times without success.

I will describe what was done before, during and after. I'm asking for someone to help me: the data is sensitive and should never have been on a RAID 0 in the first place.

The RAID was fully functional on a machine with two 1 TB SATA hard drives. It was a software RAID set up with mdadm. The first drive (/dev/sda) held swap, GRUB, the OS (Debian 7) and a partition for the RAID. On the second drive (/dev/sdb), all the space was reserved for the RAID partition.

One day I had a problem with one of our Xen servers, so I moved that server's hard drive (also 1 TB) into the RAID server. The drives ended up in the following order:

sda -> XEN

sdb -> Disk 0 RAID 0 (0, 1)

sdc -> Disk 1 RAID 0 (0, 1)

Xen came up on the machine that held the RAID and stayed there permanently, and I no longer remembered how the RAID had been assembled or exactly how it was laid out. So I started searching again for how to mount the RAID 0, now under the XenServer operating system (CentOS), and to my misfortune I ran the following commands:

sgdisk --mbrtogpt --clear /dev/sdb

sgdisk --gpttombr --clear /dev/sdb

Don't ask me why. I'm not one to run commands at random without checking each parameter, but this time I did. Not to my surprise, when I looked at the partitions on /dev/sdb, there was nothing left.

Searching for a way to recover the partitions, I came across TestDisk, and I've been betting all my chips on it so far.

I downloaded it here:

http://www.cgsecurity.org/wiki/TestDisk_Download

I read the step-by-step guide (http://www.cgsecurity.org/wiki/TestDisk_Step_By_Step) and various topics on partition recovery.

I used Quick Search, and it always shows two unrecoverable partitions with the following message:

Code: Select all

The harddisk (1000 GB / 931 GiB) seems too small! (<2499 GB / 2328 GiB)
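The 2499 GB / 2328 GiB figure in this message is consistent with the end sector of the larger of the two unrecoverable candidates in the TestDisk log further down (end=4882444287, with 512-byte sectors). A minimal sketch of that arithmetic follows. The function name is only illustrative, and the assumption that TestDisk truncates to whole units is inferred from the numbers, not taken from its source.

```python
SECTOR_SIZE = 512  # bytes per sector, as reported in the TestDisk log


def size_units(sectors: int) -> tuple:
    """Return (decimal GB, binary GiB), truncated to whole units (assumed rounding)."""
    size_bytes = sectors * SECTOR_SIZE
    return size_bytes // 10**9, size_bytes // 2**30


# Last sector claimed by the larger unrecoverable candidate (from the log).
candidate_end = 4882444287
# Total sectors of /dev/sdb (from the log).
disk_sectors = 1953525168

needed = size_units(candidate_end + 1)  # a disk must hold sectors 0..candidate_end
actual = size_units(disk_sectors)

print(f"needed: {needed[0]} GB / {needed[1]} GiB")
print(f"actual: {actual[0]} GB / {actual[1]} GiB")
```

This prints 2499 GB / 2328 GiB for the space the candidates would need and 1000 GB / 931 GiB for the disk itself, matching both figures in the message. In other words, the candidates would need a disk of about 2.5 TB, which appears to be why TestDisk lists them as unrecoverable on a 1 TB drive.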

After confirming with Enter, the following partitions were displayed:

Code: Select all

Results

Linux Swap 0 32 33 121 157 36 1951744

SWAP2 version 1, pagesize=4096, 999 MB / 953 MiB

Linux 121 157 37 183 211 28 999424

ext4 blocksize=1024 Sparse_SB Recover, 511 MB / 488 MiB

Linux 183 211 29 12341 105 52 195311616

ext4 blocksize=4096 Large_file Sparse_SB, 99 GB / 93 GiB

Linux RAID 12341 105 53 12341 138 21 2048 [srv-bkp:0]

md 1.x L.Endian Raid 0 - Array Slot : 0 (0, 1), 1048 KB / 1024 KiB

Now /dev/sdb looks like this:

Code: Select all

[root@XenServer-jpa ~]# fdisk -l /dev/sdb

WARNING: GPT (GUID Partition Table) detected on '/dev/sdb'! The util fdisk doesn't support GPT. Use GNU Parted.

Disk /dev/sdb: 1000.2 GB, 1000204886016 bytes

255 heads, 63 sectors / track, 121601 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 122 975872 82 Linux swap / Solaris

Partition 1 does not end on cylinder boundary.

/dev/sdb2 * 122 184 499712 83 Linux

Partition 2 does not end on cylinder boundary.

/dev/sdb3 184 12342 97655808 83 Linux

/dev/sdb4 12342 12342 1024 fd Linux raid autodetect

[root@XenServer-jpa ~]#

Code: Select all

[root@XenServer-jpa ~]# fdisk -l /dev/sdc

Disk /dev/sdc: 1000.2 GB, 1000204886016 bytes

255 heads, 63 sectors / track, 121601 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdc1 1 121602 976760832 fd Linux raid autodetect

[root@XenServer-jpa ~]#

/dev/sdb4

Code: Select all

[root@XenServer-jpa ~]# mdadm --examine /dev/sdb4

/dev/sdb4:

Magic: a92b4efc

Version: 1.2

Feature Map: 0x0

Array UUID: 0848cb9e:454c2524:755e039b:6040829e

Name: srv-bkp:0

Creation Time: Fri Feb 20 12:34:27 2015

Raid Level: raid0

Raid Devices: 2

Avail Dev Size: 1755258864 (836.97 GiB 898.69 GB)

Used Dev Size: 0

Data Offset: 16 sectors

Super Offset: 8 sectors

State: clean

Device UUID: c35bf73c:8749dcbf:69532198:e79ebea4

Update Time: Fri Feb 20 12:34:27 2015

Checksum: 59aac454 - correct

Events: 0

Chunk Size: 512K

Array Slot: 0 (0, 1)

Array State: Uu

[root@XenServer-jpa ~]#

Code: Select all

[root@XenServer-jpa ~]# mdadm --examine /dev/sdc1

/dev/sdc1:

Magic: a92b4efc

Version: 1.2

Feature Map: 0x0

Array UUID: 0848cb9e:454c2524:755e039b:6040829e

Name: srv-bkp:0

Creation Time: Fri Feb 20 12:34:27 2015

Raid Level: raid0

Raid Devices: 2

Avail Dev Size: 1953521648 (931.51 GiB 1000.20 GB)

Used Dev Size: 0

Data Offset: 16 sectors

Super Offset: 8 sectors

State: clean

Device UUID: 029fc76a:1e5b94c4:42713a6b:e3bc72e0

Update Time: Fri Feb 20 12:34:27 2015

Checksum: a6d19500 - correct

Events: 0

Chunk Size: 512K

Array Slot: 1 (0, 1)

Array State: uU

[root@XenServer-jpa ~]#

Code: Select all

[root@XenServer-jpa ~]# mdadm --examine --scan

ARRAY /dev/md/0 level=raid0 metadata=1.2 num-devices=2 UUID=0848cb9e:454c2524:755e039b:6040829e name=srv-bkp:0

Code: Select all

[root@XenServer-jpa ~]# cat /proc/mdstat

Personalities: [raid0]

md0: inactive sdc1[1]

976760320 blocks super 1.2

unused devices: <none>

[root@XenServer-jpa ~]#

Code: Select all

[root@XenServer-jpa mnt]# mdadm --assemble --scan -f

mdadm: failed to add /dev/sdb4 to /dev/md0: Invalid argument

mdadm: /dev/md0 assembled from 1 drive - not enough to start the array.

[root@XenServer-jpa mnt]#

Code: Select all

Sat Apr 23 07:20:13 2016

Command line: TestDisk

TestDisk 7.0, Data Recovery Utility, April 2015

Christophe GRENIER <grenier@cgsecurity.org>

http://www.cgsecurity.org

OS: Linux, kernel 2.6.32.43-0.4.1.xs1.8.0.835.170778xen (#1 SMP Wed May 29 18:06:30 EDT 2013) i686

Compiler: GCC 4.4

Compilation date: 2015-04-18T13:03:42

ext2fs lib: 1.42.8, ntfs lib: libntfs-3g, reiserfs lib: 0.3.1-rc8, ewf lib: 20120504, curses lib: ncurses 5.7

/dev/sda: LBA, HPA, LBA48, DCO support

/dev/sda: size 1953525168 sectors

/dev/sda: user_max 1953525168 sectors

/dev/sda: native_max 1953525168 sectors

/dev/sdb: LBA, HPA, LBA48, DCO support

/dev/sdb: size 1953525168 sectors

/dev/sdb: user_max 1953525168 sectors

/dev/sdb: native_max 1953525168 sectors

/dev/sdc: LBA, HPA, LBA48, DCO support

/dev/sdc: size 1953525168 sectors

/dev/sdc: user_max 1953525168 sectors

/dev/sdc: native_max 1953525168 sectors

/dev/mapper/VG_XenStorage--1e056ad7--f607--d883--3401--468c0d559ef4-MGT: LBA, HPA, LBA48, DCO support

/dev/mapper/VG_XenStorage--1e056ad7--f607--d883--3401--468c0d559ef4-MGT: size 8192 sectors

/dev/mapper/VG_XenStorage--1e056ad7--f607--d883--3401--468c0d559ef4-MGT: user_max 1953525168 sectors

/dev/mapper/VG_XenStorage--1e056ad7--f607--d883--3401--468c0d559ef4-MGT: native_max 1953525168 sectors

/dev/mapper/VG_XenStorage--1e056ad7--f607--d883--3401--468c0d559ef4-VHD--aeafc60d--762d--4ccb--a446--682478685caf: LBA, HPA, LBA48, DCO support

/dev/mapper/VG_XenStorage--1e056ad7--f607--d883--3401--468c0d559ef4-VHD--aeafc60d--762d--4ccb--a446--682478685caf: size 315203584 sectors

/dev/mapper/VG_XenStorage--1e056ad7--f607--d883--3401--468c0d559ef4-VHD--aeafc60d--762d--4ccb--a446--682478685caf: user_max 1953525168 sectors

/dev/mapper/VG_XenStorage--1e056ad7--f607--d883--3401--468c0d559ef4-VHD--aeafc60d--762d--4ccb--a446--682478685caf: native_max 1953525168 sectors

/dev/mapper/VG_XenStorage--1e056ad7--f607--d883--3401--468c0d559ef4-VHD--e1a68623--81ef--4aad--9b54--ded3acadeea0: LBA, HPA, LBA48, DCO support

/dev/mapper/VG_XenStorage--1e056ad7--f607--d883--3401--468c0d559ef4-VHD--e1a68623--81ef--4aad--9b54--ded3acadeea0: size 9142272 sectors

/dev/mapper/VG_XenStorage--1e056ad7--f607--d883--3401--468c0d559ef4-VHD--e1a68623--81ef--4aad--9b54--ded3acadeea0: user_max 1953525168 sectors

/dev/mapper/VG_XenStorage--1e056ad7--f607--d883--3401--468c0d559ef4-VHD--e1a68623--81ef--4aad--9b54--ded3acadeea0: native_max 1953525168 sectors

Warning: can't get size for Disk /dev/mapper/control - 0 B - 0 sectors, sector size=512

Warning: can't get size for Disk /dev/md0 - 0 B - CHS 1 2 4, sector size=512

Hard disk list

Disk /dev/sda - 1000 GB / 931 GiB - CHS 121601 255 63, sector size=512 - ST1000LM024 HN-M101MBB, S/N:S33PJ5BF200326, FW:2BA30001

Disk /dev/sdb - 1000 GB / 931 GiB - CHS 121601 255 63, sector size=512 - ST1000DM003-1CH162, S/N:Z1D8R94C, FW:CC47

Disk /dev/sdc - 1000 GB / 931 GiB - CHS 121601 255 63, sector size=512 - ST1000DM003-1CH162, S/N:S1DJH0TE, FW:CC49

Disk /dev/mapper/VG_XenStorage--1e056ad7--f607--d883--3401--468c0d559ef4-MGT - 4194 KB / 4096 KiB - 8192 sectors, sector size=512 - ST1000LM024 HN-M101MBB, S/N:S33PJ5BF200326, FW:2BA30001

Disk /dev/mapper/VG_XenStorage--1e056ad7--f607--d883--3401--468c0d559ef4-VHD--372aca08--e0d7--41fb--8e08--babaaabbc3a6 - 215 GB, sector size=512

Disk /dev/mapper/VG_XenStorage--1e056ad7--f607--d883--3401--468c0d559ef4-VHD--a8022c19--43e1--4f30--8edd--b0a9d1532a41 - 215 GB, sector size=512

Disk /dev/mapper/VG_XenStorage--1e056ad7--f607--d883--3401--468c0d559ef4-VHD--aeafc60d--762d--4ccb--a446--682478685caf - 161 GB, sector size=512 - ST1000LM024 HN-M101MBB, S/N:S33PJ5BF200326, FW:2BA30001

Disk /dev/mapper/VG_XenStorage--1e056ad7--f607--d883--3401--468c0d559ef4-VHD--b2868f3c--9704--43ed--afee--04315db846bc - 215 GB, sector size=512

Disk /dev/mapper/VG_XenStorage--1e056ad7--f607--d883--3401--468c0d559ef4-VHD--e1a68623--81ef--4aad--9b54--ded3acadeea0 - 4680 M, sector size=512 - ST1000LM024 HN-M101MBB, S/N:S33PJ5BF200326, FW:2BA30001

Partition table type (auto): EFI GPT

Disk /dev/sdb - 1000 GB / 931 GiB - ST1000DM003-1CH162

Partition table type: Intel

Analyse Disk /dev/sdb - 1000 GB / 931 GiB - CHS 121601 255 63

Geometry from i386 MBR: head=255 sector=63

check_part_i386 1 type EE: no test

Current partition structure:

1 P EFI GPT 0 0 2 121601 80 63 1953525167

No partition is bootable

search_part()

Disk /dev/sdb - 1000 GB / 931 GiB - CHS 121601 255 63

Linux Swap 0 32 33 121 157 20 1951728

SWAP2 version 1, pagesize=4096, 999 MB / 952 MiB

recover_EXT2: s_block_group_nr=0/61, s_mnt_count=30/4294967295, s_blocks_per_group=8192, s_inodes_per_group=2048

recover_EXT2: s_blocksize=1024

recover_EXT2: s_blocks_count 499712

recover_EXT2: part_size 999424

Linux 121 157 37 183 211 28 999424

ext4 blocksize=1024 Sparse_SB Recover, 511 MB / 488 MiB

recover_EXT2: s_block_group_nr=0/745, s_mnt_count=31/4294967295, s_blocks_per_group=32768, s_inodes_per_group=8192

recover_EXT2: s_blocksize=4096

recover_EXT2: s_blocks_count 24413952

recover_EXT2: part_size 195311616

Linux 183 211 29 12341 105 52 195311616

ext4 blocksize=4096 Large_file Sparse_SB, 99 GB / 93 GiB

Raid magic value at 12341/105/53

Raid apparent size: 1525474534 sectors

srv-bkp:0 md 1.x L.Endian Raid 0 - Array Slot : 0 (0, 1)

Linux RAID 12341 105 53 12341 105 60 8 [srv-bkp:0]

md 1.x L.Endian Raid 0 - Array Slot : 0 (0, 1), 4096 B

Raid magic value at 12341/105/61

Raid apparent size: 1525474534 sectors

srv-bkp:0 md 1.x L.Endian Raid 0 - Array Slot : 0 (0, 1)

Linux RAID 12341 105 53 12341 105 60 8 [srv-bkp:0]

md 1.x L.Endian Raid 0 - Array Slot : 0 (0, 1), 4096 B

recover_EXT2: s_block_group_nr=0/14904, s_mnt_count=1/4294967295, s_blocks_per_group=32768, s_inodes_per_group=8192

recover_EXT2: s_blocksize=4096

recover_EXT2: s_blocks_count 488380160

recover_EXT2: part_size 3907041280

Linux 60714 159 54 303916 178 6 3907041280

ext4 blocksize=4096 Large_file Sparse_SB Recover, 2000 GB / 1863 GiB

This partition ends after the disk limits. (start=975380480, size=3907041280, end=4882421759, disk end=1953525168)

recover_EXT2: s_block_group_nr=0/14904, s_mnt_count=1/4294967295, s_blocks_per_group=32768, s_inodes_per_group=8192

recover_EXT2: s_blocksize=4096

recover_EXT2: s_blocks_count 488380160

recover_EXT2: part_size 3907041280

Linux 60716 7 28 303918 25 43 3907041280

ext4 blocksize=4096 Large_file Sparse_SB Recover, 2000 GB / 1863 GiB

This partition ends after the disk limits. (start=975403008, size=3907041280, end=4882444287, disk end=1953525168)
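The two "ends after the disk limits" warnings above can be reproduced from values already in the log: the partition size is the ext4 block count converted to 512-byte sectors, and the end is start + size - 1. A small sketch of that check, with illustrative names that are not part of TestDisk:

```python
SECTOR_SIZE = 512          # from the log: sector size=512
DISK_SECTORS = 1953525168  # /dev/sdb size in sectors, from the log


def ext_part_sectors(blocks_count: int, block_size: int) -> int:
    """Filesystem size in sectors from s_blocks_count and s_blocksize."""
    return blocks_count * block_size // SECTOR_SIZE


def overrun(start: int, size: int) -> int:
    """Sectors by which [start, start + size) runs past the last disk sector."""
    end = start + size - 1
    return max(0, end - (DISK_SECTORS - 1))


size = ext_part_sectors(488380160, 4096)  # s_blocks_count, s_blocksize from the log
for start in (975380480, 975403008):      # the two candidate start sectors
    end = start + size - 1
    print(f"start={start} size={size} end={end} overrun={overrun(start, size)}")
```

Whatever filesystem these superblocks describe, it is about twice the size of the disk, so it cannot be a plain partition on /dev/sdb.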

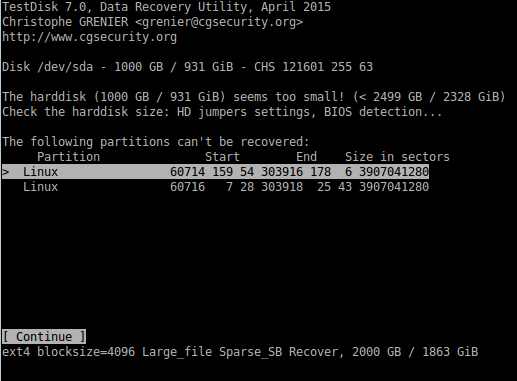

Disk /dev/sdb - 1000 GB / 931 GiB - CHS 121601 255 63

Check the harddisk size: HD jumpers settings, BIOS detection...

The harddisk (1000 GB / 931 GiB) seems too small! (< 2499 GB / 2328 GiB)

The following partitions can't be recovered:

Linux 60714 159 54 303916 178 6 3907041280

ext4 blocksize=4096 Large_file Sparse_SB Recover, 2000 GB / 1863 GiB

Linux 60716 7 28 303918 25 43 3907041280

ext4 blocksize=4096 Large_file Sparse_SB Recover, 2000 GB / 1863 GiB

Results

Linux Swap 0 32 33 121 157 36 1951744

SWAP2 version 1, pagesize=4096, 999 MB / 953 MiB

Linux 121 157 37 183 211 28 999424

ext4 blocksize=1024 Sparse_SB Recover, 511 MB / 488 MiB

Linux 183 211 29 12341 105 52 195311616

ext4 blocksize=4096 Large_file Sparse_SB, 99 GB / 93 GiB

Linux RAID 12341 105 53 12341 138 21 2048 [srv-bkp:0]

md 1.x L.Endian Raid 0 - Array Slot : 0 (0, 1), 1048 KB / 1024 KiB

Hint for advanced users. dmsetup may be used if you prefer to avoid to rewrite the partition table for the moment:

echo "0 1951744 linear /dev/sdb 2048" | dmsetup create test0

echo "0 999424 linear /dev/sdb 1953792" | dmsetup create test1

echo "0 195311616 linear /dev/sdb 2953216" | dmsetup create test2

echo "0 2048 linear /dev/sdb 198264832" | dmsetup create test3

dir_partition inode=2

* Linux 121 157 37 183 211 28 999424

ext4 blocksize=1024 Sparse_SB Recover, 511 MB / 488 MiB

Directory /

2 drwxr-xr-x 0 0 1024 20-Feb-2015 12:53 .

2 drwxr-xr-x 0 0 1024 20-Feb-2015 12:53 ..

11 drwx------ 0 0 12288 20-Feb-2015 12:34 lost+found

18 -rw-r--r-- 0 0 129281 12-Jan-2015 19:45 config-3.2.0-4-amd64

16 -rw-r--r-- 0 0 2841344 12-Jan-2015 19:34 vmlinuz-3.2.0-4-amd64

65537 drwxr-xr-x 0 0 7168 20-Feb-2015 12:54 grub

17 -rw-r--r-- 0 0 2114479 12-Jan-2015 19:45 System.map-3.2.0-4-amd64

12 -rw-r--r-- 0 0 11076227 20-Feb-2015 12:53 initrd.img-3.2.0-4-amd64

X 12 -rw-r--r-- 0 0 11076227 20-Feb-2015 12:53 initrd.img-3.2.0-4-amd64.new

X 17 -rw-r--r-- 0 0 2114479 12-Jan-2015 19:45 System.map-3.2.0-4-amd64.dpkg-new

X 12 -rw-r--r-- 0 0 11076227 20-Feb-2015 12:53 System.map-3.2.0-4-amd64.dpkg-tmp

X 18 -rw-r--r-- 0 0 129281 12-Jan-2015 19:45 config-3.2.0-4-amd64.dpkg-new

X 13 -rw-r--r-- 0 0 0 20-Feb-2015 12:53 config-3.2.0-4-amd64.dpkg-tmp

add_ext_part_i386: max

add_ext_part_i386: max

interface_write()

1 E extended 0 32 32 121 157 36 1951745

2 * Linux 121 157 37 183 211 28 999424

3 P Linux 183 211 29 12341 105 52 195311616

4 P Linux RAID 12341 105 53 12341 138 21 2048 [srv-bkp:0]

5 L Linux Swap 0 32 33 121 157 36 1951744

simulate write!

write_mbr_i386: starting...

write_all_log_i386: starting...

write_all_log_i386: CHS: 0/32/32,lba=2047

Analyse Disk /dev/sdb - 1000 GB / 931 GiB - CHS 121601 255 63

Geometry from i386 MBR: head=255 sector=63

check_part_i386 1 type EE: no test

Current partition structure:

1 P EFI GPT 0 0 2 121601 80 63 1953525167

No partition is bootable

search_part()

Disk /dev/sdb - 1000 GB / 931 GiB - CHS 121601 255 63

Linux Swap 0 32 33 121 157 20 1951728

SWAP2 version 1, pagesize=4096, 999 MB / 952 MiB

recover_EXT2: s_block_group_nr=0/61, s_mnt_count=30/4294967295, s_blocks_per_group=8192, s_inodes_per_group=2048

recover_EXT2: s_blocksize=1024

recover_EXT2: s_blocks_count 499712

recover_EXT2: part_size 999424

Linux 121 157 37 183 211 28 999424

ext4 blocksize=1024 Sparse_SB Recover, 511 MB / 488 MiB

recover_EXT2: s_block_group_nr=0/745, s_mnt_count=31/4294967295, s_blocks_per_group=32768, s_inodes_per_group=8192

recover_EXT2: s_blocksize=4096

recover_EXT2: s_blocks_count 24413952

recover_EXT2: part_size 195311616

Linux 183 211 29 12341 105 52 195311616

ext4 blocksize=4096 Large_file Sparse_SB, 99 GB / 93 GiB

Raid magic value at 12341/105/53

Raid apparent size: 1525474534 sectors

srv-bkp:0 md 1.x L.Endian Raid 0 - Array Slot : 0 (0, 1)

Linux RAID 12341 105 53 12341 105 60 8 [srv-bkp:0]

md 1.x L.Endian Raid 0 - Array Slot : 0 (0, 1), 4096 B

Raid magic value at 12341/105/61

Raid apparent size: 1525474534 sectors

srv-bkp:0 md 1.x L.Endian Raid 0 - Array Slot : 0 (0, 1)

Linux RAID 12341 105 53 12341 105 60 8 [srv-bkp:0]

md 1.x L.Endian Raid 0 - Array Slot : 0 (0, 1), 4096 B

Search for partition aborted

Results

Linux Swap 0 32 33 121 157 36 1951744

SWAP2 version 1, pagesize=4096, 999 MB / 953 MiB

Linux 121 157 37 183 211 28 999424

ext4 blocksize=1024 Sparse_SB Recover, 511 MB / 488 MiB

Linux 183 211 29 12341 105 52 195311616

ext4 blocksize=4096 Large_file Sparse_SB, 99 GB / 93 GiB

Linux RAID 12341 105 53 12341 138 21 2048 [srv-bkp:0]

md 1.x L.Endian Raid 0 - Array Slot : 0 (0, 1), 1048 KB / 1024 KiB

Hint for advanced users. dmsetup may be used if you prefer to avoid to rewrite the partition table for the moment:

echo "0 1951744 linear /dev/sdb 2048" | dmsetup create test0

echo "0 999424 linear /dev/sdb 1953792" | dmsetup create test1

echo "0 195311616 linear /dev/sdb 2953216" | dmsetup create test2

echo "0 2048 linear /dev/sdb 198264832" | dmsetup create test3

dir_partition inode=2

P Linux 183 211 29 12341 105 52 195311616

ext4 blocksize=4096 Large_file Sparse_SB, 99 GB / 93 GiB

Directory /

2 drwxr-xr-x 0 0 4096 20-Feb-2015 12:54 .

2 drwxr-xr-x 0 0 4096 20-Feb-2015 12:54 ..

11 drwx------ 0 0 16384 20-Feb-2015 12:34 lost+found

1310721 drwxr-xr-x 0 0 4096 20-Feb-2015 12:34 var

262145 drwxr-xr-x 0 0 4096 20-Feb-2015 12:34 boot

1048577 drwxr-xr-x 0 0 4096 18-Apr-2016 14:27 etc

1441793 drwxr-xr-x 0 0 4096 20-Feb-2015 12:37 media

12 lrwxrwxrwx 0 0 26 20-Feb-2015 12:37 vmlinuz

3670017 drwxr-xr-x 0 0 4096 20-Feb-2015 12:54 sbin

2097153 drwxr-xr-x 0 0 4096 20-Feb-2015 12:34 usr

4718593 drwxr-xr-x 0 0 4096 20-Feb-2015 12:51 lib

131073 drwxr-xr-x 0 0 4096 20-Feb-2015 12:40 lib64

3932161 drwxr-xr-x 0 0 4096 10-Jun-2012 03:35 selinux

3407873 drwxr-xr-x 0 0 4096 20-Feb-2015 12:49 bin

3145729 drwxr-xr-x 0 0 4096 11-Jun-2014 18:07 proc

5505025 drwxr-xr-x 0 0 4096 20-Feb-2015 12:36 dev

1179649 drwxr-xr-x 0 0 4096 11-Jun-2014 18:07 mnt

393217 drwxr-xr-x 0 0 4096 14-Jul-2013 14:24 sys

3276801 drwxrwxrwt 0 0 4096 18-Apr-2016 23:17 tmp

4587521 drwx------ 0 0 4096 11-Jun-2015 19:20 root

4325377 drwxr-xr-x 0 0 4096 20-Feb-2015 12:54 home

2752513 drwxr-xr-x 0 0 4096 20-Feb-2015 12:54 run

2490369 drwxr-xr-x 0 0 4096 20-Feb-2015 12:34 srv

2359297 drwxr-xr-x 0 0 4096 11-Jun-2015 19:02 opt

13 lrwxrwxrwx 0 0 30 20-Feb-2015 12:37 initrd.img

14 -rw------- 0 0 256 20-Feb-2015 12:54 .pulse-cookie

655361 drwx------ 0 0 4096 18-Apr-2016 23:16 .pulse

interface_write()

1 P Linux Swap 0 32 33 121 157 36 1951744

2 * Linux 121 157 37 183 211 28 999424

3 P Linux 183 211 29 12341 105 52 195311616

4 P Linux RAID 12341 105 53 12341 138 21 2048 [srv-bkp:0]

write!

write_mbr_i386: starting...

write_all_log_i386: starting...

No extended partition

You will have to reboot for the change to take effect.

TestDisk exited normally.
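One thing that stands out in the table TestDisk wrote is that partition 4 (Linux RAID) has a size of 2048 sectors, while the mdadm --examine output for /dev/sdb4 reports an Avail Dev Size of 1755258864 sectors. The sketch below compares the two. It rests on an assumption that is not stated anywhere in the output: that for this 1.2 superblock the member size equals Avail Dev Size plus Data Offset. The numbers for /dev/sdc1 fit that assumption, which is the only support offered for it here. Function names are illustrative.

```python
HEADS, SECTORS_PER_TRACK = 255, 63  # geometry reported by fdisk and TestDisk
DISK_SECTORS = 1953525168           # /dev/sdb size from the TestDisk log


def chs_to_lba(c: int, h: int, s: int) -> int:
    """Convert a CHS triple (sector is 1-based) to a 0-based LBA."""
    return (c * HEADS + h) * SECTORS_PER_TRACK + (s - 1)


def member_sectors(avail_dev_size: int, data_offset: int) -> int:
    """ASSUMPTION: RAID member size = Avail Dev Size + Data Offset (sectors)."""
    return avail_dev_size + data_offset


# Sanity check on the intact member: fdisk lists /dev/sdc1 as 976760832 1-KiB blocks.
sdc1_sectors = 976760832 * 2
sdc1_matches = member_sectors(1953521648, 16) == sdc1_sectors

# /dev/sdb4: start from the CHS in the TestDisk results, size from the superblock.
start = chs_to_lba(12341, 105, 53)
expected_size = member_sectors(1755258864, 16)
written_size = 2048                 # size TestDisk wrote for partition 4
end = start + expected_size - 1

print("sdc1 consistent with assumption:", sdc1_matches)
print(f"sdb4 start={start} expected_size={expected_size} end={end}")
print("fits on disk:", end < DISK_SECTORS)
print("written size is", expected_size - written_size, "sectors short")
```

The computed start, 198264832, agrees with the offset in TestDisk's dmsetup hint for test3. If the assumption holds, a 2048-sector partition 4 is far smaller than the member the superblock describes, which might explain why mdadm --assemble rejects /dev/sdb4 with "Invalid argument". This is an observation from the numbers above and has not been verified on the disk.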